Designing for Trust in the AI Era

#trust #data #info #ui #design #plan

It’s no longer just about what your product says, but about how believable it feels.

We used to worry about not having enough information.

Now, we’re drowning in it.

Especially since generative AI entered the scene, the core UX problem has shifted.

It’s not about access anymore. It’s about credibility.

With just one prompt, users can generate hundreds of lines of analysis, summaries, even legal interpretations.

But is it true? Can we trust it? Who’s accountable?

That’s why today’s product teams need to ask not just what features AI can unlock—but how we can design for trust.

Enter: Trust-Oriented UI.

Generative AI ≠ Truth Engine

Let’s be clear. Models like GPT, Claude, and Gemini aren’t built to tell the truth.

They’re statistical engines that predict a likely next token (roughly, the next word) based on patterns in their training data. That’s it.

Which means they’re fantastic at sounding right—but not always being right.

You’ve probably seen these hallucination cases:

- Summarizing academic papers… that don’t actually exist.

- Quoting experts… who never said what was attributed to them.

- Explaining laws… by inventing court cases out of thin air.

The worst part? Users often have no idea something’s wrong.

Which is why trust can’t just be assumed—it must be designed.

Trust Isn’t an Add-On. It’s a Design Layer.

One of the clearest responses to this problem is the rise of source-aware UIs.

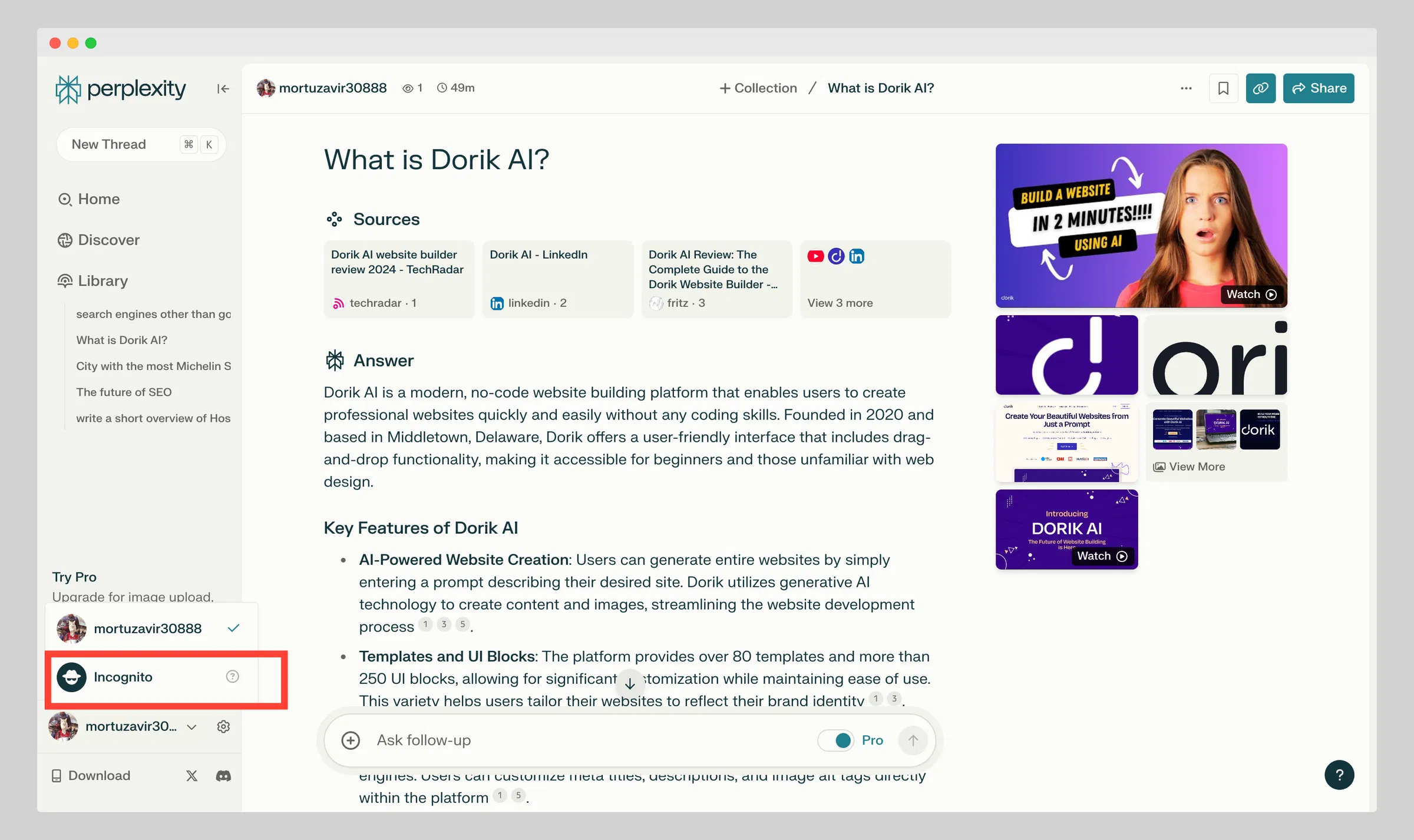

Take Perplexity.ai.

Every answer includes inline citations. Hovering over a sentence highlights exactly which source backs it up.

This isn’t just helpful—it reduces user anxiety. It visually proves that the system has nothing to hide.

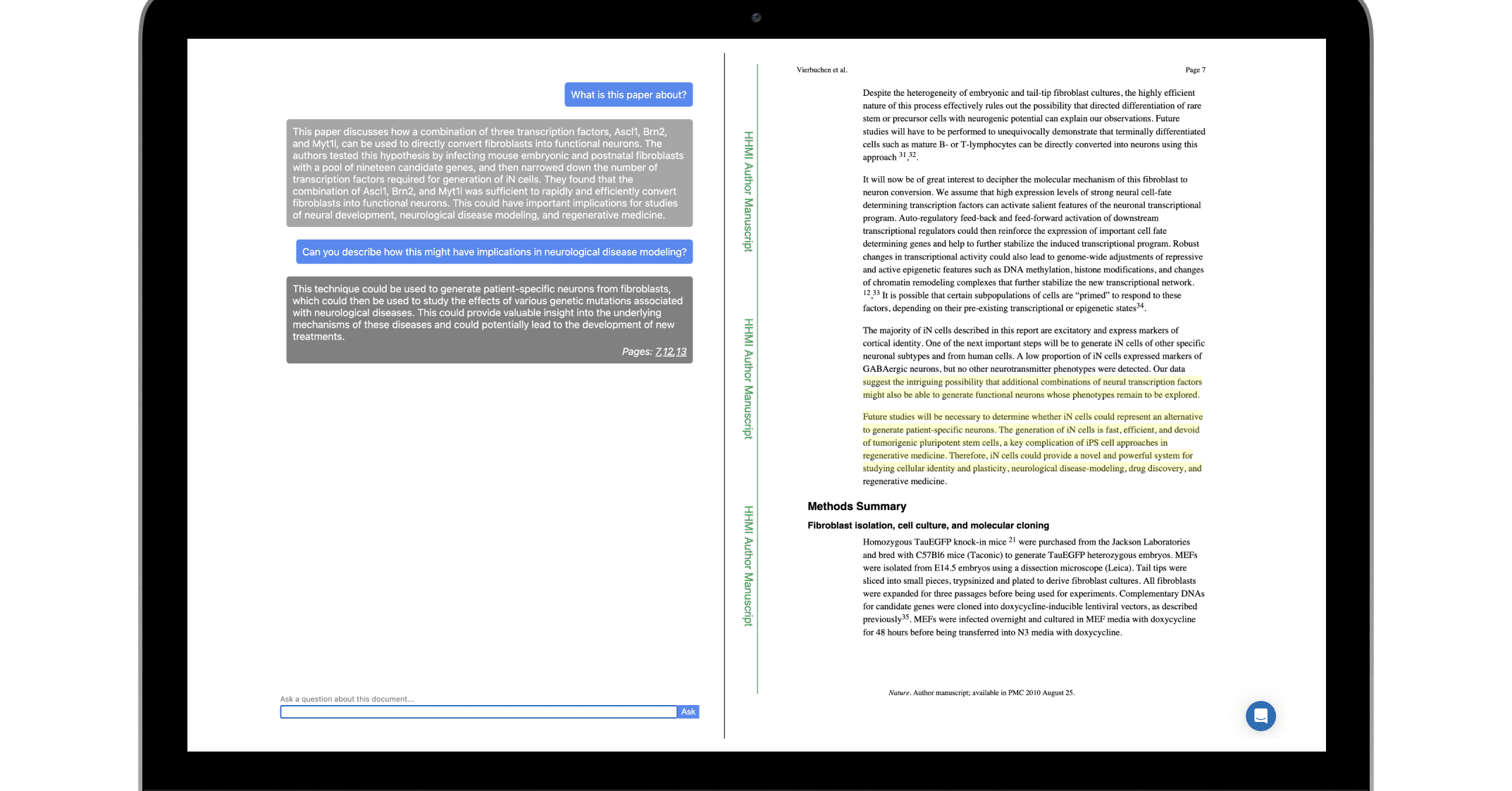

Or consider Humata.ai, which summarizes PDFs while directly linking each sentence back to the original document.

It's not just a feature—it’s a bridge of trust between the user and the AI.

These aren’t just UX “nice to haves.” They are trust systems.

The 3 Core Components of a Trust-Oriented UI

If you're designing or planning an AI product, here are the essentials to get right:

1. Source Mapping, Not Just Linking

A list of URLs at the end isn’t enough. Build 1:1 visual correspondence between each fact and its source.

→ Use inline footnotes, hoverable highlights, or even color-coded references.

2. Visualizing Uncertainty

If the AI is unsure, say so. Use UX elements to signal it.

→ “This information may be outdated” or “This is a model-generated guess.”

→ Transparency builds trust.

3. User Feedback Channels

Let users act when trust breaks.

→ Click to flag errors, suggest better sources, or request a rewrite.

→ Make the trust loop interactive—not passive.
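
To make the three components concrete, here is a minimal TypeScript sketch of one way they could fit together. Everything in it is an assumption made for illustration: the `Source`, `Claim`, and `Feedback` shapes, the function names, and the sample data are hypothetical and do not come from any real product or library. It treats an answer as a list of claims, gives each claim numbered footnote markers (source mapping), appends a notice when a claim has no source or may be stale (visualizing uncertainty), and records user reports per claim (feedback channels).

```typescript
// Hypothetical data model for illustration only; not taken from a real library.
interface Source {
  id: string;
  title: string;
  url: string;
}

interface Claim {
  text: string;
  sourceIds: string[]; // empty means nothing backs this sentence
  outdated?: boolean;  // set when the supporting source may be stale
}

type FeedbackKind = "flag_error" | "suggest_source" | "request_rewrite";

interface Feedback {
  claimIndex: number;
  kind: FeedbackKind;
  note?: string;
}

// Components 1 and 2: give every claim its own footnote markers, and add a
// plain-language notice when the claim is unsourced or possibly outdated.
function renderWithFootnotes(claims: Claim[], sources: Source[]): string {
  const known = new Map(sources.map((s): [string, Source] => [s.id, s]));
  const order: string[] = []; // source ids in order of first citation

  const lines = claims.map((claim) => {
    const markers = claim.sourceIds
      .filter((id) => known.has(id))
      .map((id) => {
        if (order.indexOf(id) === -1) order.push(id);
        return `[${order.indexOf(id) + 1}]`;
      });

    let line = claim.text;
    if (markers.length > 0) line += " " + markers.join("");
    if (markers.length === 0) line += " (model-generated guess)";
    else if (claim.outdated) line += " (may be outdated)";
    return line;
  });

  const footnotes = order.map((id, i) => {
    const source = known.get(id)!; // safe: only known ids are pushed to order
    return `[${i + 1}] ${source.title} <${source.url}>`;
  });

  return footnotes.length > 0
    ? [...lines, "", ...footnotes].join("\n")
    : lines.join("\n");
}

// Component 3: accept feedback only for a claim that exists, and return a
// new log rather than mutating the old one.
function recordFeedback(log: Feedback[], claims: Claim[], fb: Feedback): Feedback[] {
  if (fb.claimIndex < 0 || fb.claimIndex >= claims.length) {
    throw new RangeError(`No claim at index ${fb.claimIndex}`);
  }
  return [...log, fb];
}

// Made-up sample data, using placeholder example.com URLs.
const demoSources: Source[] = [
  { id: "s1", title: "Report", url: "https://example.com/report" },
];
const demoClaims: Claim[] = [
  { text: "Claim A.", sourceIds: ["s1"] },
  { text: "Claim B.", sourceIds: [] },
];
console.log(renderWithFootnotes(demoClaims, demoSources));
```

The design choice worth noting is that the source lives on the claim, not on the answer. That is what separates mapping from linking: a claim with no source cannot hide behind a list of URLs at the bottom.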

Trust Is Not a Feature. It’s a Feeling.

Think about how much we already trust machines without question.

We follow navigation apps blindly.

We copy-paste AI-generated emails without edits.

The problem isn’t that AI is unreliable.

The problem is that humans assume it’s reliable.

That’s why good trust UI interrupts that assumption—gracefully.

- A gray background on unverified text.

- A persistent label saying “Generated content.”

- A subtle button that reads “Not quite right? Ask again.”

These cues don’t get in the way—they build emotional safety.
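
As a rough sketch, the three cues above could be driven by a single piece of state. The TypeScript below is illustrative only: the `Verification` type, the function names, the CSS class names, and the specific gray value are all assumptions, not part of any existing design system. It maps a text block's verification state to a background color, an always-visible label, and a retry button, then renders them as an HTML string.

```typescript
// Hypothetical names throughout; the cue wording mirrors the list above.
type Verification = "verified" | "unverified";

interface TrustCues {
  background: string;      // CSS color behind the text block
  label: string;           // persistent, shown in every state
  retryButtonText: string; // low-key way to ask again
}

function cuesFor(state: Verification): TrustCues {
  return {
    // Gray only for unverified text; the exact color is an assumed value.
    background: state === "unverified" ? "#f0f0f0" : "transparent",
    label: "Generated content",
    retryButtonText: "Not quite right? Ask again.",
  };
}

// Escape model output before placing it in markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderBlock(text: string, state: Verification): string {
  const cues = cuesFor(state);
  return [
    `<section style="background:${cues.background}">`,
    `  <span class="trust-label">${cues.label}</span>`,
    `  <p>${escapeHtml(text)}</p>`,
    `  <button type="button" class="trust-retry">${cues.retryButtonText}</button>`,
    `</section>`,
  ].join("\n");
}

console.log(renderBlock("Unchecked summary text.", "unverified"));
```

Keeping the label and the button in every state, and varying only the background, is one way to keep the cues quiet: the interface changes only when the text has not been verified.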

Designing a New Visual Language for Trust

Most UI systems today are built for displaying information.

What we now need is a UI system built for qualifying it.

The future isn’t just about showcasing AI capabilities.

It’s about exposing its boundaries—with clarity and care.

Because trust isn’t delivered through text.

It’s delivered through design.

And tomorrow’s best products won’t just generate answers.

They’ll earn belief.

Trust UI note: bunzee.ai